Download tweet_grab.py

For example, let's say I want to download the tweets from all my contacts over the last 7 days. To do this I would use the following commands:

contact_grab.py gptreb

tweet_grab.py gptreb 7

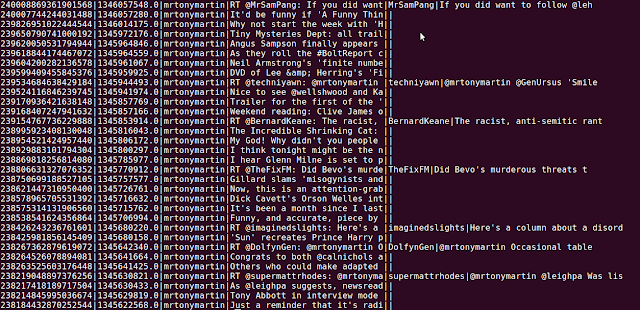

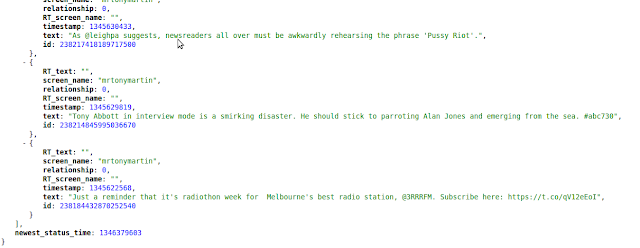

contact_grab.py creates a file with the details of all my Twitter contacts. tweet_grab.py uses this file to gather all of their tweets over a period of time. The command line output is a short summary of what has been returned, just to indicate the progress of the script. The real output is a JSON-formatted file that contains all tweets for the time period specified.

I have only just started using JSON files and I am really starting to like them. In the past I've struggled with XML files and trying to parse, traverse, and search them, but JSON in Python seems so simple to use. It also seems to be more compact. For future projects I will probably choose JSON over XML, but I only ever really deal with small projects and it may be a whole different story on something more complex.
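To give a feel for why JSON is so easy to work with in Python, here is a minimal sketch. The field names (`user`, `created_at`, `text`) and the sample tweets are made up for illustration and are not necessarily the layout that tweet_grab.py writes.

```python
import json

# Hypothetical stand-in for the contents of the output file;
# the keys and values here are assumptions, not the script's real layout.
raw = """[
  {"user": "alice", "created_at": "2013-01-05", "text": "Soldering all day"},
  {"user": "bob", "created_at": "2013-01-06", "text": "New scope arrived"}
]"""

# json.loads parses a string; json.load does the same for an open file.
tweets = json.loads(raw)

# The parsed result is plain lists and dicts, so traversing is ordinary Python.
for tweet in tweets:
    print(tweet["user"], tweet["created_at"], tweet["text"])

# Searching is a list comprehension rather than a tree walk.
scope_tweets = [t for t in tweets if "scope" in t["text"].lower()]
print(len(scope_tweets), "tweet(s) mention a scope")
```

There is no separate parser object or query language to learn, which is most of the appeal for a small project like this one.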

After listening to an episode of The Engineering Commons about software, I've made an effort in this project to handle exceptions and improve my commenting. Don't get me wrong, I've still got a long way to go, but for an electronics guy who hadn't seen Python a year ago I'm not doing too badly.

My next step is to take the output file containing the tweets and do some further processing on it to derive some metrics. I don't think that will be too difficult though; getting the data was the hard part.
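As a rough idea of what that processing might look like, the sketch below counts tweets per contact and works out an average per day. It assumes each tweet is a dict with a `user` key, which is a guess at the layout, and the function names and sample data are hypothetical.

```python
from collections import Counter


def tweets_per_user(tweets):
    """Count tweets per author, assuming each tweet dict has a 'user' key."""
    return Counter(tweet["user"] for tweet in tweets)


def average_per_day(tweets, days):
    """Average number of tweets per day over the requested window."""
    if days <= 0:
        raise ValueError("days must be positive")
    return len(tweets) / days


# Made-up sample data in the assumed layout.
sample = [
    {"user": "alice", "text": "first"},
    {"user": "bob", "text": "second"},
    {"user": "alice", "text": "third"},
]

counts = tweets_per_user(sample)
for user, total in counts.most_common():
    print(user, total)

print("average per day:", average_per_day(sample, 7))
```

A `Counter` keeps this short, and `most_common()` gives the busiest contacts first without any extra sorting code.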

Note: If you're reading this after March 2013, this script won't work. From that point on, Twitter requires all access to its API to be authenticated using OAuth, and this script doesn't support it. There are ways to do it, but as this is really just a bit of fun, I don't know if I want to invest the time to get authentication working. I may revisit it in 6 months if I feel like it.

Image 1: A sample of the command line

Image 2: A sample of the output JSON file